Interesting Image Styles and Neural Networks

Neural networks have grown extremely fast and are now used in many applications, especially in computer vision. Image Style Transfer is a really interesting one among those applications. Imagine you hired a painter to draw something in a specific style such as Impressionism; in this case, though, the “style” is specific to one particular image.

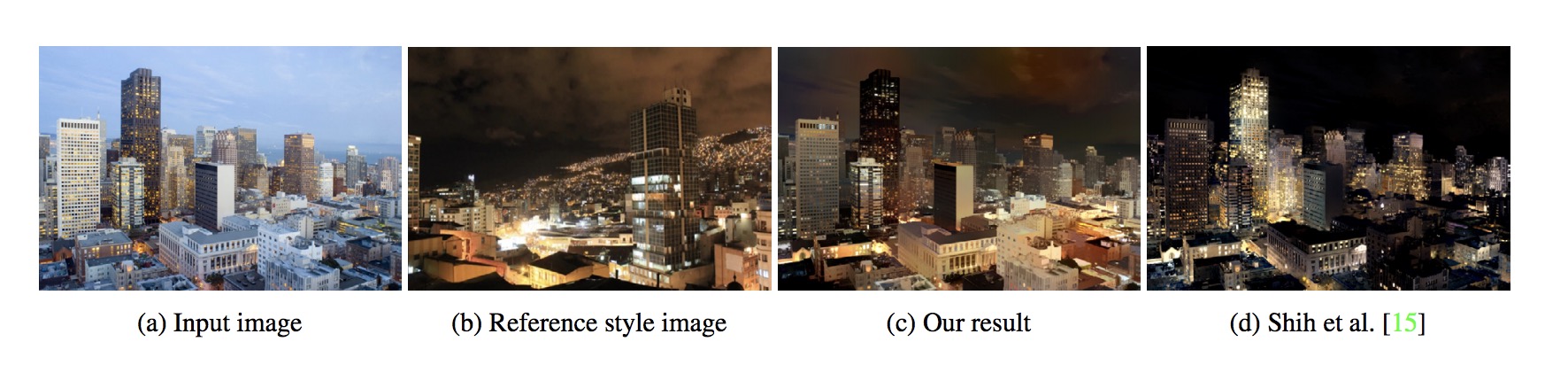

Image Source

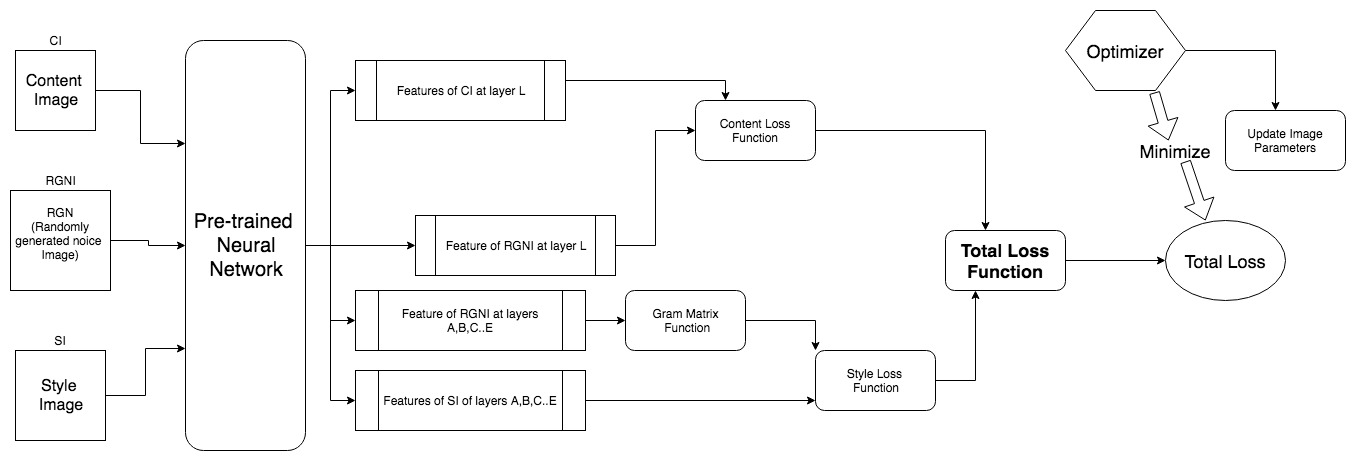

According to the paper Image Style Transfer Using Convolutional Neural Networks, when a convolutional neural network is applied to a task such as object recognition, it generates a representation of the object that will “make the image information increasingly explicit along processing hierarchy”(3). When we transfer the style of a painting to, let’s say, a photograph, we extract the content representation of the photograph and the style representation of the painting. At the same time we generate a new image (a white noise image) and update it step by step so that it matches those two representations more and more closely.

Structure:

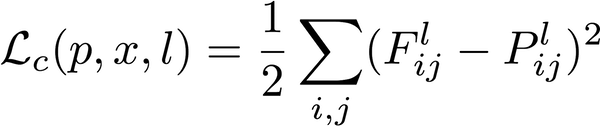

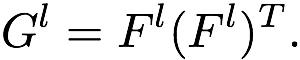

The loss has three parts: the content loss, the style loss, and the total loss that combines the two. The relationships between these parts and how they work together are depicted in the figure below:

Walid Ahmad’s blog post about Style Transfer using Keras explains all the equations pretty well.

Content Loss:

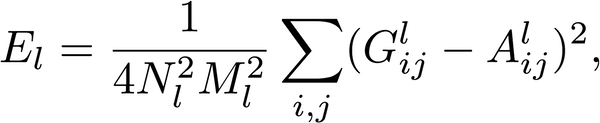
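As a rough illustration of the content loss, here is a minimal NumPy sketch. It assumes the formulation from the Gatys et al. paper (half the sum of squared differences between the feature maps of the generated image and of the content image at one layer). The function name and the tiny feature arrays are made up for the example; in practice the features come from a pre-trained network.

```python
import numpy as np

def content_loss(generated_features, content_features):
    """Half the summed squared difference between two feature maps of equal shape."""
    diff = generated_features - content_features
    return 0.5 * np.sum(diff ** 2)

# Hypothetical feature maps: 2 filters, 3 spatial positions each.
P = np.array([[1.0, 2.0, 3.0],
              [0.0, 1.0, 0.0]])   # features of the content image
F = np.array([[1.0, 2.0, 1.0],
              [0.0, 0.0, 0.0]])   # features of the generated image

loss = content_loss(F, P)         # 0.5 * (2**2 + 1**2) = 2.5
```

The loss is zero only when the generated image produces exactly the same features as the content image at that layer.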

Gram Matrix:
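The Gram matrix can be sketched in a few lines of NumPy. This assumes a feature map of shape (channels, height, width): each channel is flattened into a vector, and entry (i, j) of the result is the inner product of channel i and channel j. The name `gram_matrix` and the sample values are only for illustration.

```python
import numpy as np

def gram_matrix(features):
    """Channel-by-channel inner products of a (channels, height, width) feature map."""
    channels = features.shape[0]
    flat = features.reshape(channels, -1)   # shape (C, H*W)
    return flat @ flat.T                    # shape (C, C)

# Hypothetical feature map with 2 channels of size 2x2.
feats = np.array([[[1.0, 0.0],
                   [0.0, 1.0]],
                  [[1.0, 1.0],
                   [1.0, 1.0]]])

G = gram_matrix(feats)   # [[2, 2], [2, 4]]
```

Because every spatial position is summed over, the Gram matrix keeps which filters fire together but throws away where in the image they fire, which is why it works as a description of style.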

Style Loss:

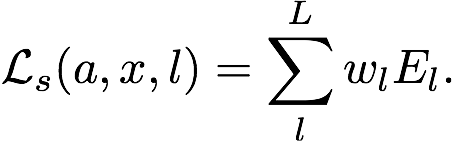
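Here is a hedged sketch of the style loss, again assuming the formulation from the Gatys et al. paper: for each style layer, the squared difference between the Gram matrices of the generated image and the style image is divided by 4·N²·M² (N channels, M spatial positions), and the per-layer values are combined with layer weights. All names and sample values are assumptions made for the example.

```python
import numpy as np

def gram_matrix(features):
    """Channel-by-channel inner products of a (channels, height, width) feature map."""
    flat = features.reshape(features.shape[0], -1)
    return flat @ flat.T

def layer_style_loss(generated, style):
    """Normalised squared Gram-matrix difference for one layer."""
    n_channels = generated.shape[0]
    n_positions = generated[0].size          # height * width
    diff = gram_matrix(generated) - gram_matrix(style)
    return np.sum(diff ** 2) / (4.0 * n_channels ** 2 * n_positions ** 2)

def style_loss(generated_layers, style_layers, layer_weights):
    """Weighted sum of the per-layer style losses."""
    return sum(w * layer_style_loss(g, s)
               for w, g, s in zip(layer_weights, generated_layers, style_layers))

# Hypothetical feature map with 2 channels of size 2x2.
feats = np.array([[[1.0, 0.0], [0.0, 1.0]],
                  [[1.0, 1.0], [1.0, 1.0]]])
flipped = feats[:, ::-1, ::-1]               # same features, mirrored in space

against_zeros = layer_style_loss(feats, np.zeros_like(feats))   # 28 / 256
against_flip = layer_style_loss(feats, flipped)                 # 0.0
```

The second value is zero even though the two feature maps differ pixel by pixel: mirroring the image does not change its Gram matrix, so the style loss does not notice it.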

Total Loss:
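The total loss is commonly written as a weighted sum of the two other losses. The sketch below assumes that form; the function name and the default weights are placeholders, not values taken from any particular implementation.

```python
def total_loss(content, style, content_weight=1.0, style_weight=1000.0):
    """Weighted sum of an already computed content loss and style loss."""
    return content_weight * content + style_weight * style

# Hypothetical loss values for one optimisation step.
balanced = total_loss(2.5, 0.109375)                        # 2.5 + 109.375
content_heavy = total_loss(2.5, 0.109375, 100.0, 1000.0)    # 250.0 + 109.375
```

Only the ratio of the two weights matters for the final picture: a larger content weight keeps the result closer to the photograph, and a larger style weight pushes it towards the painting.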

Networks

There are quite a few options for the pre-trained neural network. Here I am going to talk about some of them.

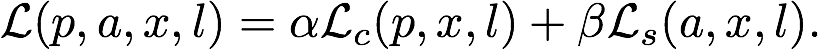

VGG 16

VGG 16 is one of the most popular neural networks for extracting image features in style transfer. Its name refers to its 16 weight layers (13 convolutional and 3 fully connected); counted without the fully connected layers but with the input and the 5 max-pooling layers, the part used for feature extraction has 19 layers in total. It mostly consists of 3*3 convolutional layers and max-pooling layers. Because it is deeper and uses smaller filters, it needs fewer parameters per filter and has more non-linearities.

There are also many resources about style transfer implemented with VGG16. The 19 layers are divided into 5 blocks. To calculate the content loss, people usually take the output of some convolutional layer in block 4 as the features to plug into the equation. For the style loss, the output of the first convolutional layer in each block is usually picked to form the style layer set. But it's not necessarily the only way to pick them, and the results vary based on which layers we pick for the loss calculation.
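To make the "smaller filters, fewer parameters" point concrete, here is a small back-of-the-envelope calculation. Three stacked 3*3 convolutions see the same 7*7 region of the input as a single 7*7 convolution. The channel count of 64 is just an example value and biases are ignored.

```python
def conv_weights(kernel_size, in_channels, out_channels):
    """Number of weights in one convolutional layer (biases ignored)."""
    return kernel_size * kernel_size * in_channels * out_channels

channels = 64                                            # example value
stacked_3x3 = 3 * conv_weights(3, channels, channels)    # 27 * C^2 = 110592
single_7x7 = conv_weights(7, channels, channels)         # 49 * C^2 = 200704
```

The stacked version needs roughly 55% of the weights and also applies three non-linearities instead of one.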
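One possible layer selection, written down as plain Python, could look like this. The names follow the `block<N>_conv<M>` naming scheme of the Keras VGG16 model; picking `block4_conv2` for the content layer and equal style weights are assumptions for the sketch, not the only valid choice.

```python
# Content features: one convolutional layer from block 4 (assumed choice).
CONTENT_LAYER = "block4_conv2"

# Style features: the first convolutional layer of each of the 5 blocks.
STYLE_LAYERS = [f"block{block}_conv1" for block in range(1, 6)]

# Equal weight for every style layer (assumed; other weightings are possible).
STYLE_LAYER_WEIGHTS = [1.0 / len(STYLE_LAYERS)] * len(STYLE_LAYERS)
```

Swapping the content layer for a shallower one tends to preserve more pixel-level detail, which is one easy way to see how the layer choice changes the result.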

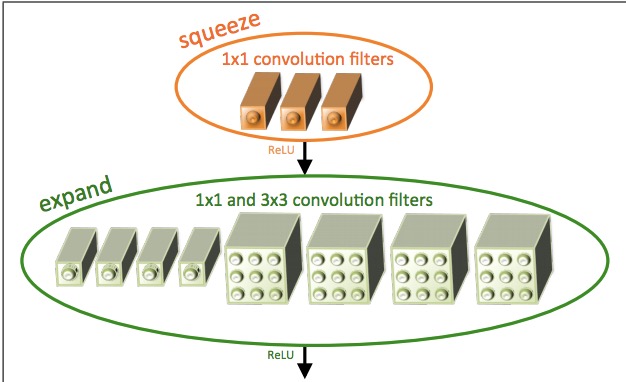

SqueezeNet

SqueezeNet is another excellent neural network architecture, trained on ImageNet, with a very small size. It reaches AlexNet-level accuracy with 50x fewer parameters and a model size of less than 0.5MB.

SqueezeNet replaces many of the 3*3 convolutional filters with 1*1 filters, so the model is much smaller and less computation is involved, while the feature maps stay comparatively large. If you have done the Style Transfer assignment of Stanford CS231n, you probably remember that SqueezeNet was used in that assignment due to its efficiency.
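A quick calculation shows how much the 1*1 filters save. SqueezeNet's building block, the fire module, first squeezes the input with 1*1 filters and then expands it with a mix of 1*1 and 3*3 filters. The sizes below (96 input channels, 16 squeeze filters, 64 + 64 expand filters) are used as illustrative numbers, and biases are ignored.

```python
def conv_weights(kernel_size, in_channels, out_channels):
    """Number of weights in one convolutional layer (biases ignored)."""
    return kernel_size * kernel_size * in_channels * out_channels

def fire_module_weights(in_channels, squeeze, expand_1x1, expand_3x3):
    """Weights of a squeeze layer followed by parallel 1x1 and 3x3 expand layers."""
    squeeze_w = conv_weights(1, in_channels, squeeze)
    expand_w = (conv_weights(1, squeeze, expand_1x1)
                + conv_weights(3, squeeze, expand_3x3))
    return squeeze_w + expand_w

fire = fire_module_weights(96, 16, 64, 64)   # 1536 + 1024 + 9216 = 11776
plain = conv_weights(3, 96, 128)             # ordinary 3x3 layer, 96 -> 128 channels
```

With these numbers the fire module needs about 9 times fewer weights than a plain 3*3 layer producing the same 128 output channels.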

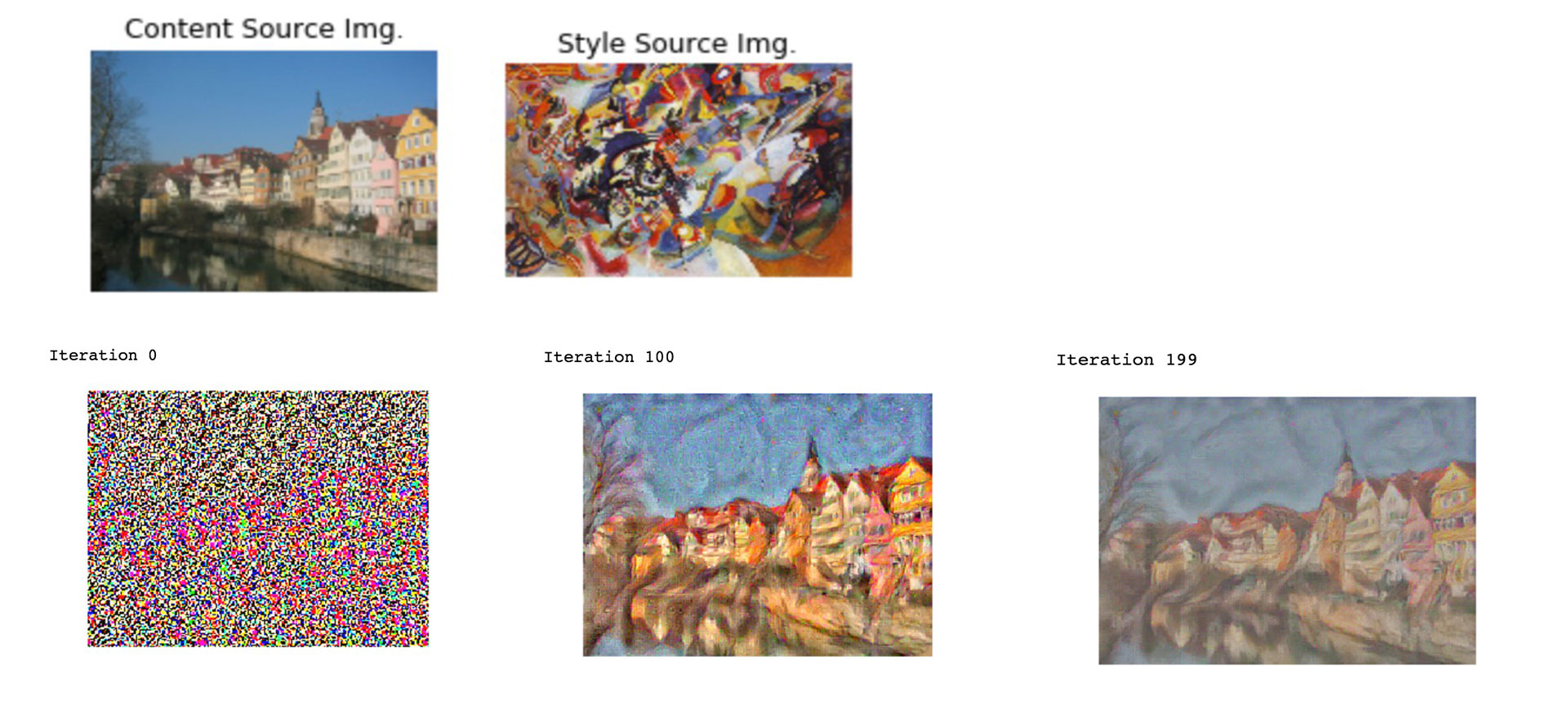

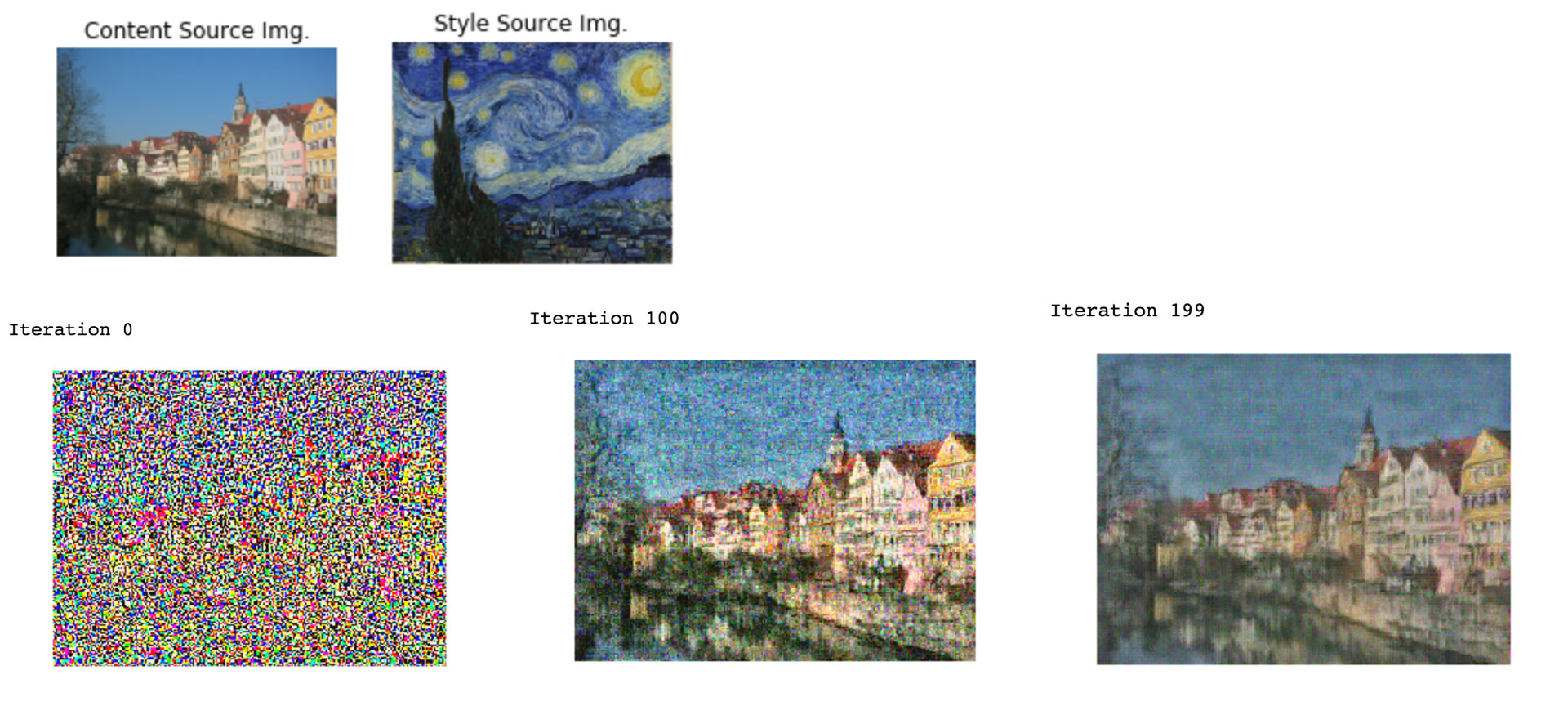

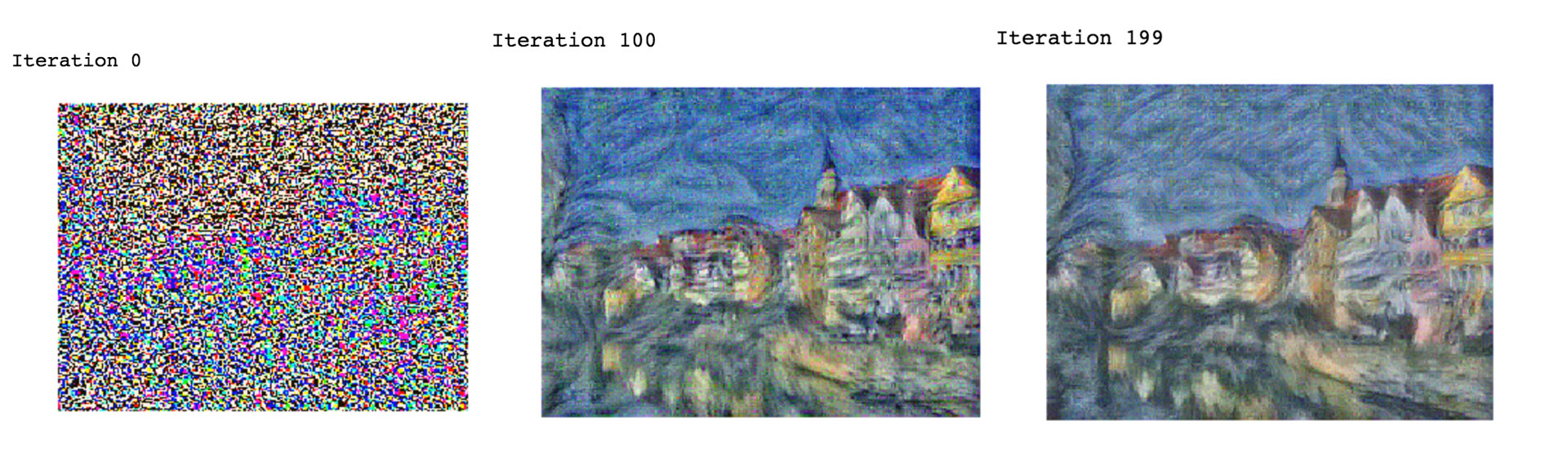

Results

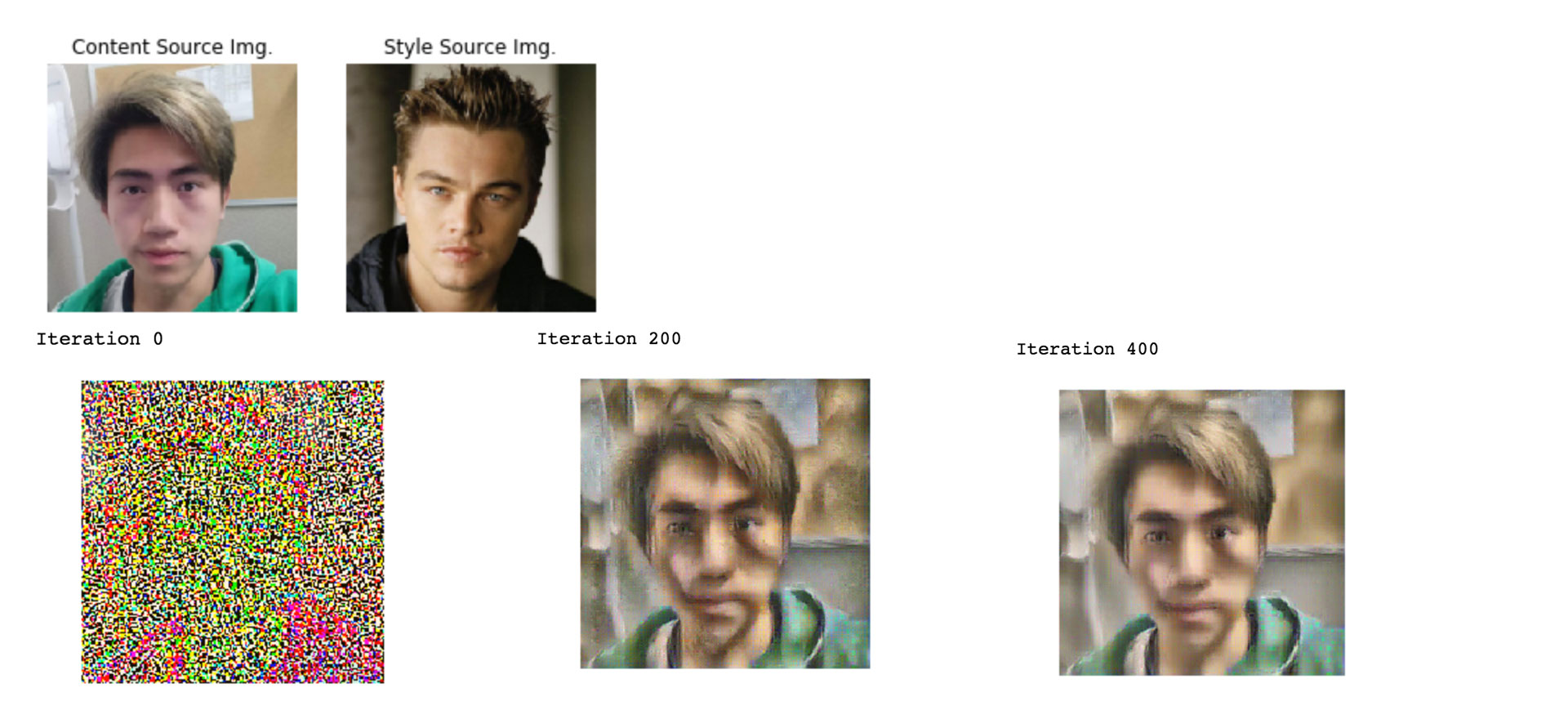

If it works well, you will see something like the image below. This experiment was run with SqueezeNet.

By changing the weights of the style image for the style layer set and the weight of the content image, you can see some differences like the ones below:

But this technique is limited to the overall effect on the whole image; it cannot target specific features.

Obviously, this didn't work... Looks like the computer knows there is no way that a combination of two such perfect faces exists.


References:

1. Image Style Transfer Using Convolutional Neural Networks

2. Deep Photo Style Transfer

3. Making AI Art with Style Transfer using Keras

Suggested Reading (Including References):

1. Convolutional neural networks for artistic style transfer

2. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and 0.5MB model size

3. Artistic Style Transfer